Unicode, UTF-8 and multilingual text: An introduction

Unicode and OpenType: Characters and glyphs

Modern TeX engines, i.e., XeTeX and LuaTeX, have evolved from Knuth’s original TeX engine largely due to the need to keep pace with developments in the technology landscape, particularly Unicode (for text) and OpenType (for fonts). Today, through the use of packages such as fontspec and unicode-math, LaTeX users can access extremely sophisticated typesetting capabilities provided by OpenType fonts—including advanced multilingual typesetting and OpenType-based mathematical typesetting (pioneered by Microsoft).

However, to get the most out of using OpenType fonts with XeTeX/LuaTeX, it can be helpful to become familiar with a number of background topics/concepts—especially for troubleshooting problems or to pave the way for more advanced/complex work. For example, you might read about the XeTeX and LuaTeX engines using “UTF-8 input” or that they are “Unicode aware”, and further reading on OpenType fonts might discuss or mention topics such as “Unicode encoding”, OpenType “font features”, “glyphs”, “glyph IDs”, “glyph names” and so forth. Our objective is to provide an introduction to these terms/topics and piece together a basic framework to show how they are related and, hopefully, provide support for further work or problem-solving.

The topics we aim to cover fall fairly neatly into two main areas: Unicode, which in effect inhabits the world of text, characters and text encoding, and OpenType, whose world is one of fonts and glyphs. Of course, those two worlds are interconnected and there is some crossover, even in this first article.

Which topics are we going to discuss?

The main focus of this article is some Unicode-related topics: starting out with a discussion of what is meant by a “character” and moving on to introduce scripts/languages, Unicode encoding and UTF-8—together with an example of working with multilingual text files. A follow-up article will build on this piece to cover background topics related to OpenType font technology. Clearly, within the confines of a blog post it isn’t possible to attempt a “deep dive” into all the areas we hope to discuss: our stated aim is to provide the overall framework showing how a few key concepts are related and work together. We’ll start with the most basic concept: that of the character.

The character: A basic building block

A fundamental concept at the heart of our discussion (and of Unicode) is the meaning of a “character”: it is one of those words whose meaning is often “assumed” through its use in day-to-day work and conversations. However, from the perspective of Unicode, typesetting and font technologies, we need to be a little more precise and define what is meant by “a character”. For example, it might be quite natural for us to think of a bold a and an italic a as different “characters”. But not so: they are merely different visual representations of the same fundamental character, which Unicode gives the official name LATIN SMALL LETTER A.

Unicode defines a character as:

“The smallest component of written language that has semantic value; refers to the abstract meaning and/or shape, rather than a specific shape...”

which clearly distinguishes between a character’s specific visual appearance and its meaning.

You can think of a character as the fundamental unit, or building block, of a language or, more correctly, a script (a topic we discuss below). What a character actually looks like when displayed using a particular font is not relevant to Unicode’s definition of a character. Only the meaning is of real interest here: the role and purpose of each character as one of a set of building blocks from which scripts/languages are ultimately constructed.
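As a small illustration of a character being identified by its meaning rather than its appearance, the following sketch uses Python's standard `unicodedata` module (Python is our choice for illustration here; the article itself does not prescribe a language) to look up the official name of the letter a, and to go from that name back to the character.

```python
import unicodedata

# One abstract character, whatever font or style is used to display it.
ch = "a"

# Character -> official Unicode name.
name = unicodedata.name(ch)
print(name)

# Official Unicode name -> character.
same_ch = unicodedata.lookup("LATIN SMALL LETTER A")
print(same_ch == ch)
```

Nothing in the result depends on a font: bold, italic or any other styling of the letter is applied later, when the character is displayed.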

Script and language

It is worth briefly mentioning two important concepts: scripts and languages. The Unicode web site provides a useful definition of a script:

“The Unicode Standard encodes scripts rather than languages. When writing systems for more than one language share sets of graphical symbols that have historically related derivations, the union of all of those graphical symbols is treated as a single collection of characters for encoding and is identified as a single script.”

Using an example from Wikipedia, the Latin script comprises a particular collection of characters which are used across multiple languages: English, French, German, Italian and so forth. Of course, not all characters defined within the Latin script are used by all languages based on the Latin script: for example, the English alphabet does not contain the accented characters present in other European languages such as French or German.

OpenType fonts: scripts and languages

At this point we’ll cross over from Unicode into OpenType fonts because the concepts of script and language also play an extremely important role within OpenType font technology.

A set of languages which use the same script may each have different typographic traditions when it comes to displaying (typesetting) text written in a particular language. A good example is to be found in the Turkish language and the behaviour of the dotless i (see that page’s notes on ligatures). Typographic “rules” relating to scripts/languages are built into the functionality of OpenType fonts through the use of so-called script and language tags which are used to identify rules that should apply to particular script/language combinations. Naturally, the set of scripts/languages supported by each OpenType font will vary according to the choices made by the font’s creators and the reason for producing it. Sophisticated typesetting software, such as XeTeX or LuaTeX, can take advantage of those rules (built into OpenType fonts) by allowing users to selectively apply them to the input text when typesetting text in a particular language—for example, by using the LaTeX fontspec package.

Looking inside an OpenType font: scripts/languages

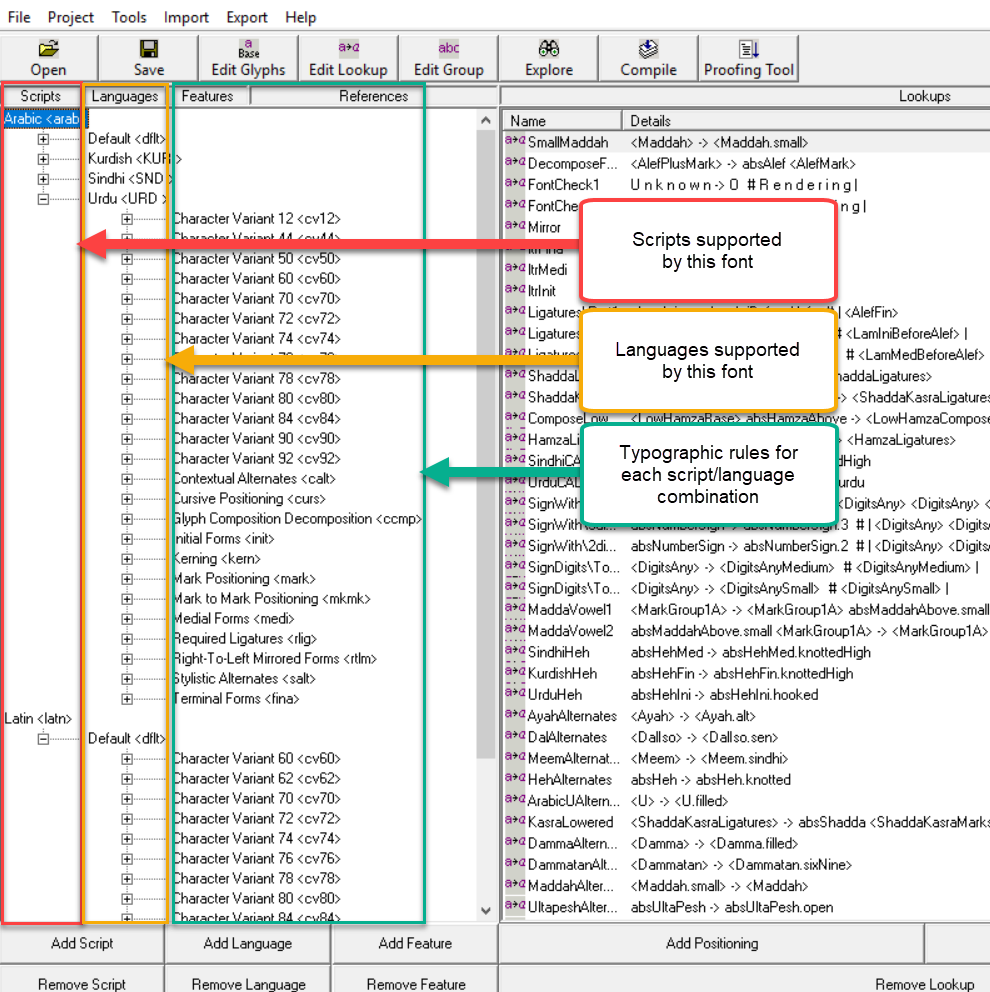

Just to make this clearer, here is a screenshot showing the free Scheherazade OpenType font opened inside the (also free) Microsoft VOLT font-editing software. In this image you can see the scripts, languages and typographic features that are built into Scheherazade—using VOLT you can add extra features and functionality to Scheherazade, but that’s far outside the scope of this article!

From this screenshot you can see that Scheherazade supports the Arabic and Latin scripts and provides further specialist support for several languages that use the Arabic script—using so-called OpenType features, which are listed in the green-bordered box above. We won’t go into the specifics of these features but the message here is that high-quality OpenType fonts have a lot of intelligence built into them, ready for use by typesetting software capable of taking advantage of typographic rules built into fonts.

The interested reader can browse the OpenType tag registry to see the script tags and language tags currently used within the OpenType specification.

Back to characters: Different character roles

The characters which comprise the fundamental elements of a script (or language) don't all perform the same role. For example, in most languages there are characters for punctuation, characters for numeric digits as well as the characters that we think of as letters of the alphabet which, for some scripts, also exist in uppercase and lowercase forms. The concept of a character is quite broad and the Unicode Standard includes specialist characters which are not designed to be displayed but whose job it is “to control the interpretation or display of text”. For example, when typesetting some Arabic text you may wish to force, or prevent, the joining behaviour of certain characters; the Unicode standard provides special control characters to do this: the so-called ZERO WIDTH JOINER and the ZERO WIDTH NON-JOINER. Those characters are not intended for display and are “absorbed” by software whilst processing the text in order to produce their intended visual effects.
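To make the idea of non-displayed characters a little more concrete, here is a minimal sketch using Python's standard `unicodedata` module. The constant names `ZWJ` and `ZWNJ` are our own; the code points U+200D and U+200C are the ones Unicode assigns to these two characters.

```python
import unicodedata

ZWJ = "\u200D"   # ZERO WIDTH JOINER
ZWNJ = "\u200C"  # ZERO WIDTH NON-JOINER

for ch in (ZWJ, ZWNJ):
    # General category "Cf" is Unicode's "format" category: characters
    # that influence layout but have no visible form of their own.
    print(f"U+{ord(ch):04X}", unicodedata.name(ch), unicodedata.category(ch))
```

Both characters report the general category `Cf`, which is how software can recognise them as formatting controls rather than as something to draw.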

All characters specified within the Unicode standard are assigned a set of properties which, in effect, describes the role and purpose of each character in the Unicode encoding—character names, such as LATIN SMALL LETTER A, are just one element of a character’s property list. These properties are fully described in the Unicode Character Database (UCD) and are widely used in computerised text processing operations such as searching, sorting, spell-checking and so forth. Data files listing Unicode character properties are also available for download.
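Many programming languages ship with a copy of some of this property data. As a hedged sketch, Python's standard `unicodedata` module exposes a handful of UCD properties; the helper `describe` below is a hypothetical name of ours, and it shows only three of the many properties the database defines.

```python
import unicodedata

def describe(ch):
    """Return a few Unicode Character Database properties for one character."""
    return {
        "code point": f"U+{ord(ch):04X}",
        "name": unicodedata.name(ch),
        "general category": unicodedata.category(ch),   # e.g. Ll = lowercase letter
        "bidi class": unicodedata.bidirectional(ch),    # e.g. L = left-to-right
    }

# A lowercase letter, an uppercase letter, a digit,
# an Arabic letter (LAM) and an Arabic vowel mark (FATHA).
for ch in ("a", "A", "7", "\u0644", "\u064E"):
    print(describe(ch))
```

Properties such as the general category and the bidirectional class are the kind of information that searching, sorting and text layout software relies on.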

Among the properties allocated to each character, the most important one for our discussion is a numeric identifier assigned by its Unicode encoding, a topic we now turn to.

Characters: Numbers and encodings

It’s a statement of the obvious but computers and other digital devices are in the business of storing and processing numeric data: so how does this relate to text? As you write some text using a computer keyboard, or by tapping the screen of a mobile device, your keystrokes are turned into numbers which represent the string of characters you are typing.

At some point you may wish to transfer that text (a sequence of numbers) through an e-mail, a text message or via online communication such as a Tweet or a post on some form of social media. Clearly, the device on which you composed the text and the device(s) used by its recipient(s) must, somehow, agree on which numbers represent which characters. If not, your text might not be displayed correctly on the recipient’s device.

For today’s global communications to work, sending and receiving devices need some “mutually agreed convention” through which a particular set of numbers represents a specific set of characters. This convention is called an encoding: a set of numbers used to represent a particular set of characters. The Unicode encoding is now the de facto global standard.
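The mapping between characters and numbers is easy to observe directly. As a minimal sketch, Python's built-in `ord` and `chr` functions convert between a character and the number Unicode assigns to it; the sample text is our own.

```python
text = "TeX"

# Characters -> numbers (the values Unicode assigns to each character).
numbers = [ord(ch) for ch in text]
print(numbers)

# Numbers -> characters: any device using the same encoding recovers the text.
restored = "".join(chr(n) for n in numbers)
print(restored)
```

Sender and recipient only need to agree that, for example, 84 means T; that agreement is exactly what an encoding provides.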

Unicode: bits and bytes for storing text

Unicode is an enormous standard which covers far, far more than just text encoding but here we are focussing only on the encoding it provides.

Bits, bytes and how many characters?

We mentioned that devices store and represent text as numbers; specifically, characters are stored as integers: whole numbers. To understand the implications of this for Unicode encoding, we need to have a very brief, very basic, review of how computers store integers (we don’t intend to venture into computer science).

To cut short a very long story, today’s desktop or handheld devices store integers in discrete “chunks” which can be 1, 2, 4 or 8 bytes long. Each of these storage units can store integers up to a maximum positive value based on the total number of bits contained in each storage unit:

- 1 byte (8 bits): maximum positive integer is 255;

- 2 bytes (16 bits): maximum positive integer is 65535;

- 4 bytes (32 bits): maximum positive integer is 4,294,967,295;

- 8 bytes (64 bits): maximum positive integer is 18,446,744,073,709,551,615.
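The four maximum values listed above follow from the number of bits in each storage unit. A short sketch (the function name `max_unsigned` is ours) reproduces them:

```python
def max_unsigned(n_bytes):
    """Largest non-negative integer that fits in n_bytes bytes (8 bits each)."""
    bits = 8 * n_bytes
    return 2**bits - 1

for n_bytes in (1, 2, 4, 8):
    print(f"{n_bytes} byte(s), {8 * n_bytes} bits: {max_unsigned(n_bytes):,}")
```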

In practice, the Unicode standard uses numbers in the range 0 to 1,114,111 to encode all the world’s characters, with the result that it needs just 21 bits to encode the full range. We can see this by noting that storage units containing n bits can represent any positive integer from 0 up to a maximum value of \(2^n -1\); consequently:

- the maximum value that can be stored in 20 bits is \(2^{20} -1 = 1,048,575\) (too small);

- the maximum value that can be stored in 21 bits is \(2^{21} -1 = 2,097,151\) (large enough).

We’ve noted that computers store data (numbers) in units of 1, 2, 4 (or 8) bytes, so how big does the storage unit need to be if we have to store values up to the maximum Unicode value of 1,114,111? Clearly, a byte-sized storage unit can contain a maximum value of 255 and 2 bytes can store 65535: neither of these is sufficient to store the full range of characters encoded by Unicode. The next available option is storage units whose size is 4 bytes, which can store integers up to a maximum of 4,294,967,295 which is far more than we’d actually need. So, if we chose 4 bytes as our storage unit we certainly have more than enough room to store all the Unicode values, with each character being stored as an integer requiring 4 bytes (32 bits). However, using 4 bytes to store everything is very wasteful of space because even the largest Unicode values need a maximum of 21 bits—which, if stored using 32 bits, would mean that 11 out of those 32 bits would never be used.
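The bit counts in this argument can be checked in a few lines. This sketch uses Python's `int.bit_length` method; the variable names are our own.

```python
MAX_CODE_POINT = 1_114_111          # largest Unicode value (10FFFF hexadecimal)

# 20 bits are too few, 21 bits are enough.
assert 2**20 - 1 < MAX_CODE_POINT <= 2**21 - 1

bits_needed = MAX_CODE_POINT.bit_length()
unused_bits = 32 - bits_needed      # bits left idle in a 4-byte storage unit

print(bits_needed)   # 21
print(unused_bits)   # 11
```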

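Note: Although the Unicode range spans from 0 to 1,114,111, not every value in that range is actually used: for technical reasons, some values are considered to be invalid for actual use as Unicode characters (for example, the 2,048 values from D800 to DFFF hexadecimal are reserved as “surrogates” for use by UTF-16).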
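One class of unusable values can be observed from Python. The sketch below assumes the surrogate range (D800 to DFFF hexadecimal) as its example of invalid values; the function name `is_encodable` is hypothetical and the test is deliberately narrow, since it only asks whether Python will encode the value as UTF-8.

```python
def is_encodable(code_point):
    """True if Python can turn this value into a character and encode it as UTF-8."""
    try:
        chr(code_point).encode("utf-8")
        return True
    except ValueError:
        # chr() raises ValueError beyond 0x10FFFF; encoding a surrogate raises
        # UnicodeEncodeError, which is a subclass of ValueError.
        return False

for value in (0x0644, 0xD800, 0x10FFFF, 0x110000):
    print(f"{value:#08x}", is_encodable(value))
```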

So, what is UTF-8?

If you read about XeTeX or LuaTeX you are almost certain to encounter explanations which state that those TeX engines read text and LaTeX input files in “UTF-8 format”. So what is “UTF-8 format” and how does it relate to Unicode? In Unicode parlance, each one of its 1,114,112 values (ranging from 0 to 1,114,111) used to encode the world’s characters is called a code point.

We’ve seen that, in theory, we’d need to store all our Unicode-encoded text using 4 bytes per character in order to represent the full range of Unicode’s code points. However, in practice, some rather smart people invented a simple way to represent a single Unicode number (code point) as a sequence of smaller numbers, each of which is stored in a single byte: a process which transforms a single (larger) integer into a sequence of smaller (byte-sized) ones. Because of this transformation, the characters of our text file are no longer each represented by a single numeric value: each character becomes a byte sequence, and anything from 1 to 4 (consecutive) bytes in the text file can represent a single individual Unicode character (i.e., its code point value).

UTF stands for Unicode Transformation Format and the key word here is Transformation. In essence, you can think of UTF-8 as a “recipe” or algorithm for converting (transforming) a single Unicode code point value into a sequence of 1 to 4 byte-sized pieces. As the value of the Unicode code point increases so does the number of single bytes required to represent it in UTF-8 format.
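The “recipe” can be written down in a few lines. The following is a minimal sketch of the standard UTF-8 bit layout, not production code (in practice you would simply call `str.encode("utf-8")`); the function name `utf8_encode` is our own.

```python
def utf8_encode(code_point):
    """Transform one Unicode code point into its UTF-8 byte sequence."""
    if not 0 <= code_point <= 0x10FFFF or 0xD800 <= code_point <= 0xDFFF:
        raise ValueError("not a value that UTF-8 can encode")
    if code_point < 0x80:        # 1 byte:  0xxxxxxx
        return bytes([code_point])
    if code_point < 0x800:       # 2 bytes: 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x10000:     # 3 bytes: 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    # 4 bytes: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    return bytes([0xF0 | (code_point >> 18),
                  0x80 | ((code_point >> 12) & 0x3F),
                  0x80 | ((code_point >> 6) & 0x3F),
                  0x80 | (code_point & 0x3F)])

# Compare with Python's built-in encoder for code points of increasing size.
for cp in (0x41, 0x0644, 0x20AC, 0x1F600):
    print(f"U+{cp:04X}", utf8_encode(cp).hex(" "), utf8_encode(cp) == chr(cp).encode("utf-8"))
```

The bits of the code point are split into groups of six (plus a shorter leading group), and each group is placed in a byte whose top bits mark it as either the first byte of a sequence or a continuation byte.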

There are technical and historical reasons for creating UTF-8 and the story behind the invention of UTF-8 is recorded in a fascinating e-mail from 2003, which, near the beginning of the e-mail, contains the line:

“That's not true. UTF-8 was designed, in front of my eyes, on a placemat in a New Jersey diner one night in September or so 1992.”

An example: the Arabic letter ل

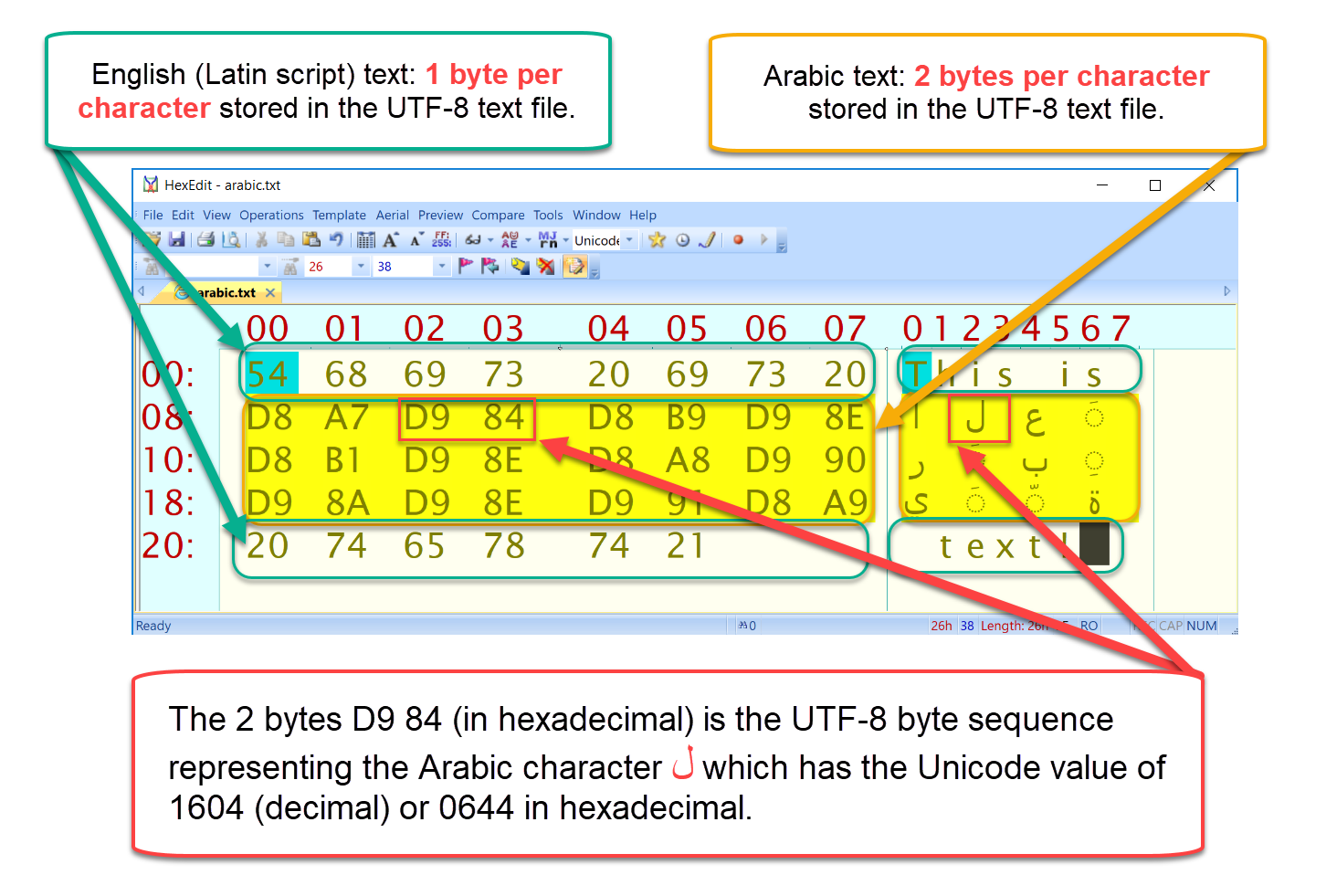

Let’s take an example of the Arabic letter ل (Unicode name ARABIC LETTER LAM) which is allocated the Unicode code point value 1604 (decimal) or 0644 (hexadecimal): its representation in UTF-8 is the two-byte sequence D9 84 (hex) or, in decimal, 217 132. When using UTF-8 as the format for storing text, rather than a text file containing the single number 1604 to represent ل, it is converted into two byte-sized values: 217 and 132—the character ل is stored as a two-byte sequence. Readers who want to explore the UTF-8 algorithm in more detail can find an in-depth explanation, and C code, on my personal blog site.
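The arithmetic behind this particular two-byte sequence can be followed step by step. This sketch applies the standard two-byte UTF-8 layout (110xxxxx 10xxxxxx) to the code point 0644; the variable names are ours.

```python
code_point = 0x0644                    # ARABIC LETTER LAM, 1604 in decimal

print(f"{code_point:011b}")            # the 11 significant bits: 11001000100

high5 = code_point >> 6                # top 5 bits:    11001
low6 = code_point & 0b111111           # bottom 6 bits: 000100

byte1 = 0b11000000 | high5             # 110xxxxx marks the first of two bytes
byte2 = 0b10000000 | low6              # 10xxxxxx marks a continuation byte

print(byte1, byte2)                    # 217 132
print(f"{byte1:02X} {byte2:02X}")      # D9 84
```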

When a piece of software (e.g., XeTeX or LuaTeX) reads text in UTF-8 format, that software needs to determine the Unicode value for each character present in that file, so it uses an algorithm to reverse the UTF-8 transformation process. Through that “reversal algorithm” the two bytes (217 and 132) are recombined to generate the integer 1604, which can then be recognized as the Unicode code point value for the Arabic letter ل.
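A “reversal algorithm” of this kind might look like the following sketch. It is not the code used inside XeTeX or LuaTeX; the function name `utf8_decode_one` is hypothetical, and for brevity it omits some checks a robust decoder needs (for example, rejecting overlong encodings and surrogate values).

```python
def utf8_decode_one(data):
    """Recombine the bytes of ONE UTF-8 sequence into a Unicode code point."""
    first = data[0]
    if first < 0x80:                 # 0xxxxxxx: a 1-byte sequence
        length, value = 1, first
    elif first >> 5 == 0b110:        # 110xxxxx: first of 2 bytes
        length, value = 2, first & 0x1F
    elif first >> 4 == 0b1110:       # 1110xxxx: first of 3 bytes
        length, value = 3, first & 0x0F
    elif first >> 3 == 0b11110:      # 11110xxx: first of 4 bytes
        length, value = 4, first & 0x07
    else:
        raise ValueError("not a valid first byte")
    if len(data) != length:
        raise ValueError("wrong number of bytes for this sequence")
    for byte in data[1:]:
        if byte >> 6 != 0b10:        # continuation bytes look like 10xxxxxx
            raise ValueError("expected a continuation byte")
        value = (value << 6) | (byte & 0x3F)
    return value

print(utf8_decode_one(bytes([217, 132])))   # 1604
```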

So, in conclusion, UTF-8 really is just an intermediate data format used for the storage and transmission of Unicode-encoded text.

Note: Some systems choose to use/store text using 32 bits per character; this is called UTF-32. There is also UTF-16, but UTF-8 is the most common way to store Unicode-encoded text.
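To see how the three formats differ for a single character, this sketch encodes ARABIC LETTER LAM using Python's built-in codecs. The big-endian variants (`utf-16-be`, `utf-32-be`) are chosen here only so that no byte-order mark is added to the output.

```python
lam = "\u0644"   # ARABIC LETTER LAM

sizes = {}
for encoding in ("utf-8", "utf-16-be", "utf-32-be"):
    data = lam.encode(encoding)
    sizes[encoding] = len(data)
    print(f"{encoding:10} {len(data)} bytes: {data.hex(' ')}")
```

UTF-32 stores the code point value directly in 4 bytes, whereas UTF-8 and UTF-16 each need only 2 bytes for this character.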

Multilingual TeX files: XeTeX and LuaTeX

Both XeTeX and LuaTeX are capable of very sophisticated multilingual typesetting although their mechanisms for achieving this are rather different and reflect the design/development philosophy of each engine. We won’t explore this in depth but simply note that the XeTeX engine contains software components (built into its executable) which are not present in LuaTeX—most notably software for a process called OpenType shaping (e.g., via a library called HarfBuzz).

LuaTeX, by contrast, adopts a different approach: rather than building facilities directly into the actual TeX engine, LuaTeX provides an extremely rich collection of commands (TeX primitives) and a very powerful Lua-based API through which developers can construct equally advanced solutions for multilingual typesetting. Although the LuaTeX philosophy might entail extra work for LaTeX package developers, it provides a great deal of additional flexibility because solutions are not “hard coded” into the actual LuaTeX engine itself, but are constructed from TeX and Lua code—or plugins written in C/C++.

Aside: Readers wishing to further explore the fascinating, but complex, world of OpenType shaping may be interested to read about the superb open-source library called HarfBuzz—used by many applications including Firefox, Chrome and LibreOffice and, of course, by XeTeX. The author of this article has used HarfBuzz to create LuaTeX plugins to do Arabic typesetting.

It’s now commonplace (e.g., on social media) to transmit text which contains characters from multiple languages and a UTF-8 text file storing multi-language text can easily contain characters whose representation in UTF-8 is 1, 2, 3 or 4 bytes long. So, in effect, a UTF-8 text file is just a stream of single bytes but each actual character in that file could be anything from 1 to 4 bytes long: the individual characters have become multi-byte sequences.
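As a quick illustration, the sketch below measures the UTF-8 length of four sample characters of our own choosing: a Latin letter, an Arabic letter, the euro sign and an emoji.

```python
import unicodedata

# LATIN CAPITAL LETTER A, ARABIC LETTER LAM, EURO SIGN, GRINNING FACE
samples = ["A", "\u0644", "\u20AC", "\U0001F600"]

lengths = {}
for ch in samples:
    lengths[f"U+{ord(ch):04X}"] = len(ch.encode("utf-8"))
    print(f"U+{ord(ch):04X}", unicodedata.name(ch), len(ch.encode("utf-8")), "byte(s)")
```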

To further explore some key aspects of working with (typesetting) multilingual text we’ll use an example containing the Arabic script because Arabic provides us with scope for addressing multiple concepts.

Aside: the Arabic script

The Arabic script is written in a cursive style which is read and written from right to left. Each Arabic letter can, potentially, adopt one of 4 different shapes according to:

- whether it is displayed as a single, standalone (isolated), character (not joined to anything else);

- whether it occurs within a word—at the start, middle or end of a word: referred to as initial, medial and final forms respectively.

Each character of the Arabic script has its own set of joining rules and may, or may not, change shape/appearance when it has another character to its left, its right or its left and right. Readers interested in exploring this further can find a full list on Wikipedia.

Example: Arabic and English text in UTF-8

Suppose we create a UTF-8 text file containing a single line of English and Arabic text: This is العَرَبِيَّة text!

This line of text contains 3 space characters, 11 English (Latin script) characters and 12 Arabic characters (although that may not be immediately obvious/apparent). When saved as a UTF-8 text file it occupies 38 bytes of storage, resulting from the following:

- Latin script: spaces plus English text: 14 ✕ 1-byte characters = 14 bytes;

- Arabic script: 12 Arabic characters ✕ 2 bytes per character = 24 bytes.

A total of 14 + 24 = 38 bytes.
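The byte count can be verified programmatically. In this sketch the Arabic word is written with `\u` escapes so that the code is unambiguous regardless of how your editor displays right-to-left text; the exact sequence of 12 code points is our reading of the example word and should be treated as an assumption.

```python
# Assumed code points of the Arabic word in the example (12 characters).
arabic = ("\u0627\u0644\u0639\u064E\u0631\u064E"
          "\u0628\u0650\u064A\u0651\u064E\u0629")
line = "This is " + arabic + " text!"

one_byte = sum(1 for ch in line if len(ch.encode("utf-8")) == 1)
two_byte = sum(1 for ch in line if len(ch.encode("utf-8")) == 2)
total = len(line.encode("utf-8"))

print(one_byte, two_byte, total)   # 14 12 38
```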

Digging deeper

If we save our example text in a UTF-8 file called arabic.txt and open it in a hexadecimal editor we can examine it to see the actual bytes it contains. From a study of the following annotated screenshot you can see that the Arabic text is stored as 2 bytes per character:

A UTF-8 text file containing English and Arabic text open inside a hex editor. You can clearly see that Latin-script characters require a single byte but Arabic-script characters are stored using two bytes per character.

You can make a couple of observations from this screenshot:

- the Arabic text is stored in a left-to-right sequence and the characters are the raw unshaped (isolated) versions of the Arabic letters and vowels;

- there’s no additional information following the Latin script “This is ” to inform any software reading this file that the next character is in the Arabic script.
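If you don't have a hex editor to hand, a similar byte-level view can be produced with a few lines of Python. This is only a rough imitation of a hex editor; the helper name `hex_rows` is ours, the Arabic code points are our assumed reading of the example word, and `bytes.hex` with a separator requires Python 3.8 or later.

```python
def hex_rows(data, width=16):
    """Format bytes as rows of space-separated hex pairs, like a hex editor."""
    return [data[i:i + width].hex(" ") for i in range(0, len(data), width)]

# Assumed code points of the example line, with the Arabic word escaped.
arabic = ("\u0627\u0644\u0639\u064E\u0631\u064E"
          "\u0628\u0650\u064A\u0651\u064E\u0629")
data = ("This is " + arabic + " text!").encode("utf-8")

for row in hex_rows(data):
    print(row)
```

The first eight bytes are the single-byte Latin characters of “This is ”; immediately afterwards the byte pairs beginning d8 or d9 are the Arabic characters, with nothing in between to announce the change of script.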

If you are typesetting a multilanguage document (e.g., containing English and Arabic), then while reading/processing the input text file (as a stream of bytes) XeTeX or LuaTeX must be able to detect the start and end of each character and read the correct number of bytes required to reverse the UTF-8 transformation and generate the corresponding Unicode code point. It is the UTF-8 algorithm itself which enables software to do this: enabling detection of the first byte of each individual character and how many bytes need to be read in order to calculate the corresponding Unicode code point. UTF-8 is simple to use, but really quite ingenious.
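The way a reader finds character boundaries can be sketched as follows. This is an illustration of the principle, not the code inside either TeX engine; the function name `split_utf8` is hypothetical, and the sketch assumes well-formed input.

```python
def split_utf8(data):
    """Split a well-formed UTF-8 byte stream into one bytes object per character."""
    chunks = []
    i = 0
    while i < len(data):
        first = data[i]
        # The leading bits of the first byte announce the sequence length.
        if first < 0x80:
            length = 1
        elif first >> 5 == 0b110:
            length = 2
        elif first >> 4 == 0b1110:
            length = 3
        elif first >> 3 == 0b11110:
            length = 4
        else:
            raise ValueError(f"byte {first:#04x} cannot start a character")
        chunks.append(data[i:i + length])
        i += length
    return chunks

text = "is \u0627\u0644 !"            # Latin, two Arabic letters, Latin
chunks = split_utf8(text.encode("utf-8"))
print([chunk.hex(" ") for chunk in chunks])
```

Because continuation bytes always begin with the bits 10, they can never be mistaken for the first byte of a character, which is what makes this byte-by-byte scan reliable.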

Logical order, display order and OpenType shaping

If you look closely at the Arabic above (العَرَبِيَّة) it may be difficult to see that our text file does indeed contain 12 individual Arabic characters, particularly if you are unfamiliar with the Arabic script! However, if you carefully count the Arabic characters displayed on the right-hand side of the screenshot above you can see there are 12 in total.

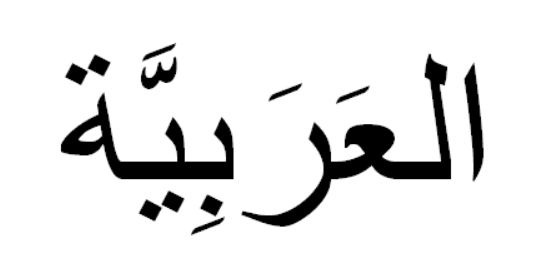

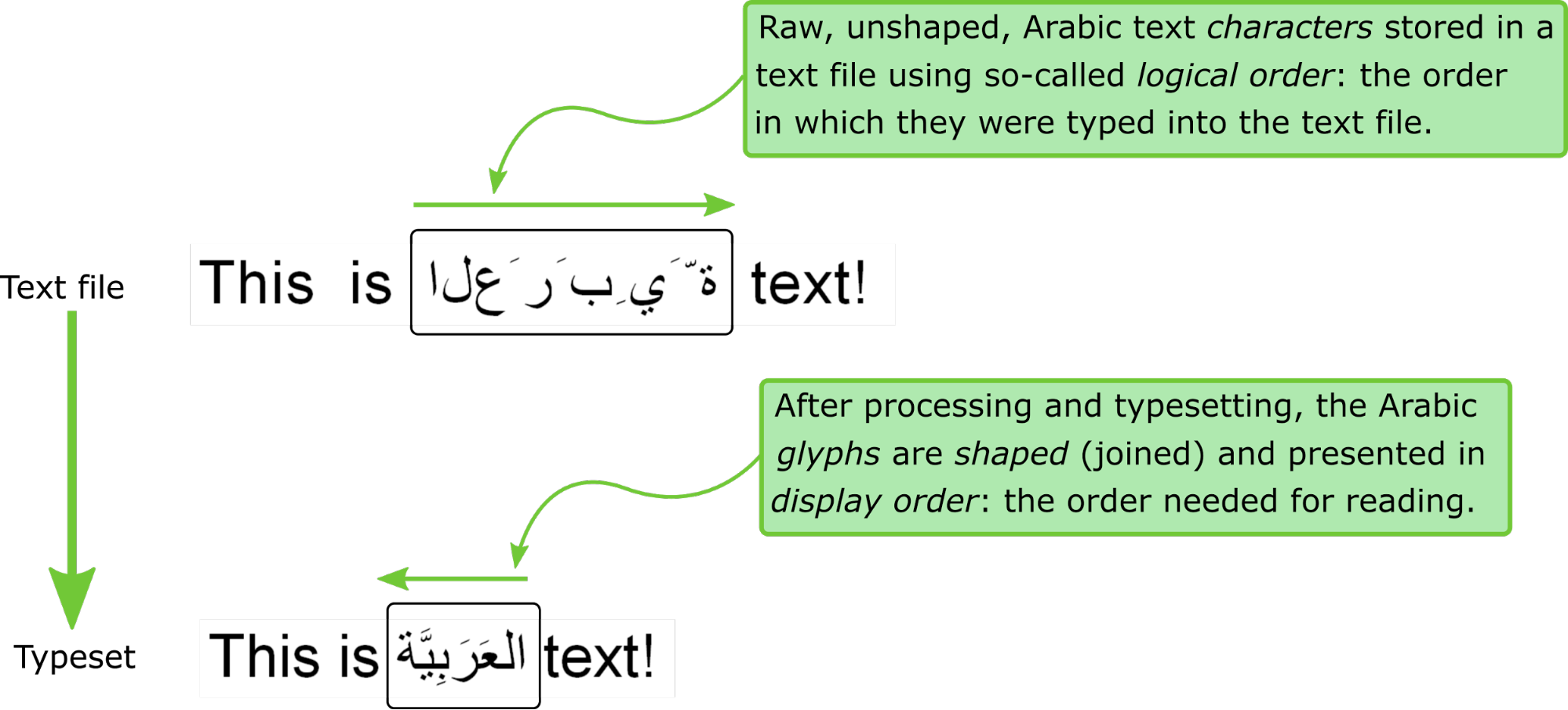

For complex-script languages, such as Arabic, what our text file stores and what you see on screen are visibly very different indeed! What you see when viewing that text in, say, a browser, is (depending on the font used):

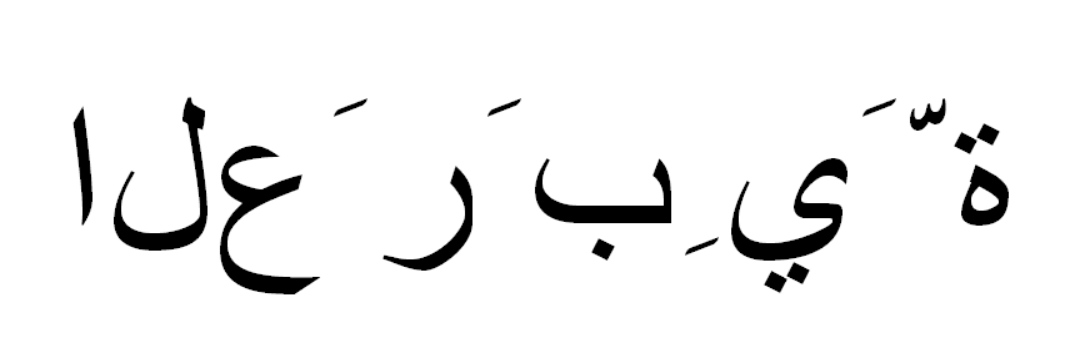

But, as the screenshot above shows, what the UTF-8 text file actually contains is this:

Even if you are not familiar with the cursive nature of the Arabic script you can clearly see that “something” has happened during the course of transferring Arabic characters contained in a text file through to typesetting and/or display on the screen (as glyphs). If you are used to using TeX/LaTeX with simple-script languages, for example Latin-based languages, this can be very confusing indeed!

Some important concepts are at play here because Unicode text files are in the business of storing… well, text (Unicode), and typesetting and display systems are in the business of using fonts and glyphs (OpenType):

- the text file saved the Arabic characters in a left-to-right order but Arabic is read/displayed from right-to-left: text files store text in so-called logical order;

- the text file contains individual characters that look very different to the actual display presented on the screen: the text file contains the Arabic characters in their isolated, non-joined, form.

What’s going on?

Within a text file Arabic is stored as a left-to-right sequence of isolated form characters: if you think about it, the text file stores the Arabic text in the order/sequence in which it was typed (the logical order). It is only when that text is processed for display, or typeset, that it is displayed in its correct reading order, often referred to as the visual order or display order; in addition, the isolated forms of the Arabic characters are shaped into their typographically correct display versions. One way to think about this is that a simple text file has to store text (Unicode characters) in the most basic form possible: raw, unshaped, individual text characters. It is the task of system software to render those characters for display based on the operating system, fonts and typesetting/rendering software available on the display device.
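Logical order can be inspected without any rendering involved. The sketch below walks through the Arabic word in stored order and prints each character's name and bidirectional class using Python's standard `unicodedata` module; the 12 code points are our assumed reading of the example word.

```python
import unicodedata

# Assumed code points of the example word, in stored (logical) order.
arabic = ("\u0627\u0644\u0639\u064E\u0631\u064E"
          "\u0628\u0650\u064A\u0651\u064E\u0629")

stored = [(unicodedata.name(ch), unicodedata.bidirectional(ch)) for ch in arabic]
for index, (name, bidi) in enumerate(stored):
    # AL = right-to-left Arabic letter, NSM = non-spacing mark (vowels etc.)
    print(index, bidi, name)
```

Index 0 is the first character typed (and the first one read), even though it appears at the right-hand end of the word when displayed. The bidirectional class recorded for each character is part of the information that display software uses to work out the visual order.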

When the Arabic text in that file is typeset/displayed, it undergoes a process called shaping. The individual Arabic characters are converted into shaped glyphs which correctly represent the variant of each character required according to the joining rules of the Arabic script and writing system. In addition, high-quality typesetting software (using good OpenType fonts) will add further processing by applying additional typographic sophistication through a process called OpenType shaping—a process that encompasses a wide range of typographic operations which can include:

- replacing multiple individual glyphs with a single complex ligature glyph (very common with Arabic), or

- positioning operations that, for example, adjust the positions of Arabic vowels based on which glyph they sit above or below.

The difference between logical order and visual (display) order. In this graphic you can see that Arabic characters stored in a text file undergo re-ordering and shaping when they are displayed or typeset.

Designers and creators of advanced OpenType fonts invest very considerable time and expertise to provide the sophisticated typographic capabilities built into their fonts.

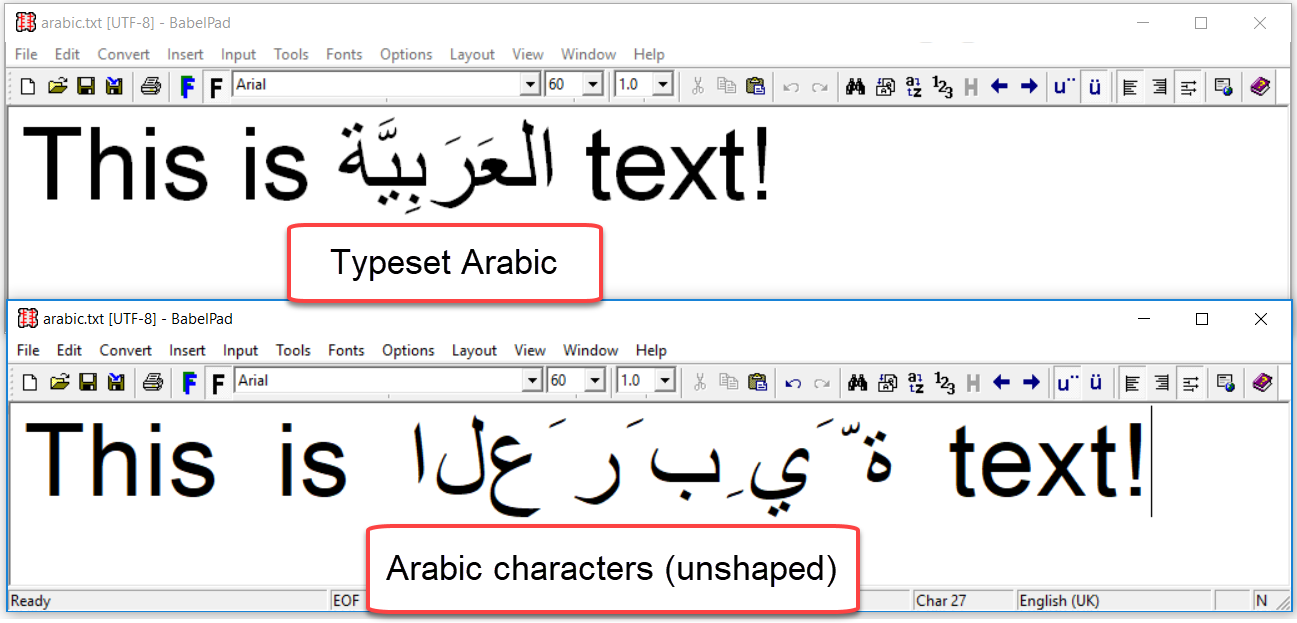

To switch off the shaping being applied to the Arabic text we can use the excellent, free, BabelPad Unicode text editor (Windows only) which lets you disable the shaping to see the raw, individual unjoined (unshaped) characters actually present in the text file—see the lower half of this combined screenshot:

Using the BabelPad Unicode text editor to switch on OpenType shaping (upper figure) or switch it off (lower figure). Switching off OpenType shaping makes it much easier to edit Arabic text.

The concepts of logical order and display order, coupled with the processes of shaping, can be quite confusing when you first encounter them during editing or typesetting with multilingual text files containing complex scripts such as Arabic: hopefully, the above has helped to avoid some initial confusion.